Convolutional Neural Network

2022. 9. 8. 16:23ㆍDL/DL_Network_cal

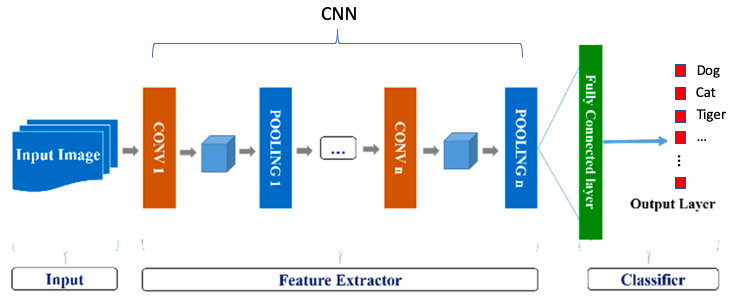

Modules of Classifier

Feature Extractor => Convolution Layer + Pooling Layer → output = Feature vector

Classifier => Dense Layer → output = Class Scores

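Why extract features

Feeding the input image directly into the classifier makes classification difficult (performance ↓)

→ So features are extracted first and passed to the classifier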

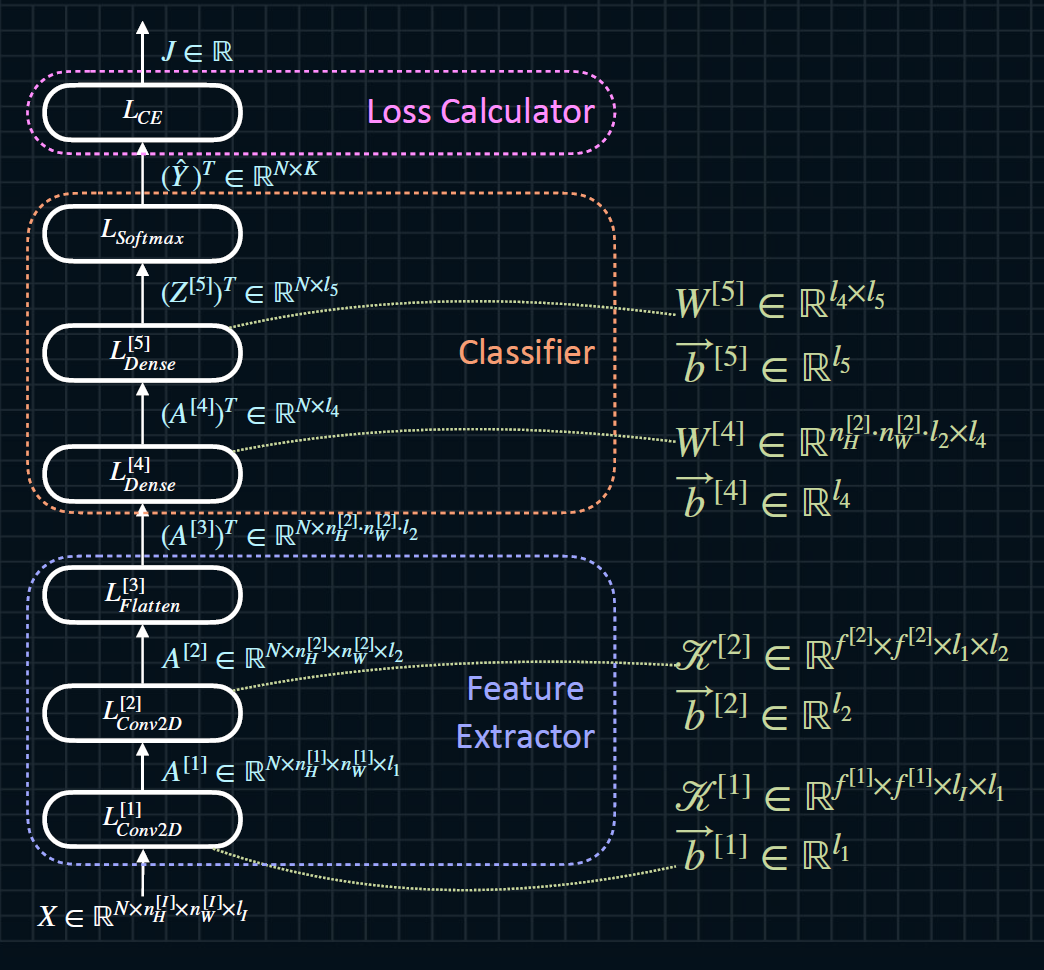

Shapes in the Classifier

```python
import tensorflow as tf
from tensorflow.keras.layers import Dense

n_neurons = [50, 25, 10]

dense1 = Dense(units=n_neurons[0], activation='relu')
dense2 = Dense(units=n_neurons[1], activation='relu')
dense3 = Dense(units=n_neurons[2], activation='softmax')

# Stand-in for the flattened feature-extractor output, shape (32, 245)
x = tf.random.normal(shape=(32, 245))
print("Input feature: {}".format(x.shape))

# input shape = (32, 245)
# W, B = (245, number of neurons), (number of neurons,)
# output shape = (32, number of neurons)
x = dense1(x)
W, B = dense1.get_weights()
print("W/B: {}/{}".format(W.shape, B.shape))
print("After dense1: {}\n".format(x.shape))

x = dense2(x)
W, B = dense2.get_weights()
print("W/B: {}/{}".format(W.shape, B.shape))
print("After dense2: {}\n".format(x.shape))

x = dense3(x)
W, B = dense3.get_weights()
print("W/B: {}/{}".format(W.shape, B.shape))
print("After dense3: {}\n".format(x.shape))
```

results

Input feature: (32, 245)

W/B: (245, 50)/(50,)

After dense1: (32, 50)

W/B: (50, 25)/(25,)

After dense2: (32, 25)

W/B: (25, 10)/(10,)

After dense3: (32, 10)
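The shapes above follow one rule: a Dense layer with `units` neurons that receives a `(batch, in_features)` input holds a weight matrix of shape `(in_features, units)` and a bias of shape `(units,)`, and it outputs `(batch, units)`. The following is a minimal pure-Python sketch of that bookkeeping; the helper `dense_shapes` is a hypothetical name used only for illustration and is not part of Keras.

```python
def dense_shapes(input_shape, units):
    """Return (W shape, B shape, output shape) for a fully connected layer."""
    batch, in_features = input_shape
    return (in_features, units), (units,), (batch, units)


shape = (32, 245)  # flattened feature vectors, as in the example above
total_params = 0
for units in [50, 25, 10]:
    w_shape, b_shape, shape = dense_shapes(shape, units)
    # every weight and every bias is one trainable parameter
    total_params += w_shape[0] * w_shape[1] + b_shape[0]
    print("W/B: {}/{} -> output {}".format(w_shape, b_shape, shape))

print("trainable parameters:", total_params)
```

The batch size (32) never appears in the weight shapes, which is why the same layers work for any batch size.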

Shapes in the Feature Extractors

```python
import tensorflow as tf
from tensorflow.keras.layers import Conv2D
from tensorflow.keras.layers import MaxPooling2D
from tensorflow.keras.layers import Flatten

N, n_H, n_W, n_C = 32, 28, 28, 3
n_conv_filter = 5
k_size = 3
pool_size, pool_strides = 2, 2
batch_size = 32

x = tf.random.normal(shape=(N, n_H, n_W, n_C))

conv1 = Conv2D(filters=n_conv_filter, kernel_size=k_size, padding='same', activation='relu')
conv1_pool = MaxPooling2D(pool_size=pool_size, strides=pool_strides)
conv2 = Conv2D(filters=n_conv_filter, kernel_size=k_size, padding='same', activation='relu')
conv2_pool = MaxPooling2D(pool_size=pool_size, strides=pool_strides)
flatten = Flatten()

print("Input: {}\n".format(x.shape))

# conv layers use padding='same', so input and output height/width are identical
# pooling layer output size = floor((n_H + 2p - f) / s) + 1
x = conv1(x)
W, B = conv1.get_weights()
# W = (kernel_size, kernel_size, channels, filters), B = (filters,)
print("W/B: {}/{}".format(W.shape, B.shape))
print("After conv1: {}".format(x.shape))

x = conv1_pool(x)
print("After conv1_pool: {}".format(x.shape))

x = conv2(x)
W, B = conv2.get_weights()
print("W/B: {}/{}".format(W.shape, B.shape))
print("After conv2: {}".format(x.shape))

x = conv2_pool(x)
print("After conv2_pool: {}".format(x.shape))

# flatten produces 32 vectors, one per sample in the batch
x = flatten(x)
print("After flatten: {}".format(x.shape))
```

results

Input: (32, 28, 28, 3)

W/B: (3, 3, 3, 5)/(5,)

After conv1: (32, 28, 28, 5)

After conv1_pool: (32, 14, 14, 5)

W/B: (3, 3, 5, 5)/(5,)

After conv2: (32, 14, 14, 5)

After conv2_pool: (32, 7, 7, 5)

After flatten: (32, 245)
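The spatial sizes printed above can be reproduced by hand with the output-size formula floor((n + 2p - f) / s) + 1. The sketch below is pure Python with hypothetical helper names (`output_size`, `same_padding`). It assumes stride 1 and an odd kernel size for the `'same'` convolution, in which case a padding of (f - 1) / 2 per side keeps the size unchanged; Keras computes its padding internally, so this only mirrors the resulting shapes.

```python
def output_size(n, f, s=1, p=0):
    """Spatial output size of a conv/pool window: floor((n + 2p - f) / s) + 1."""
    return (n + 2 * p - f) // s + 1


def same_padding(f):
    """Per-side padding that preserves the size for stride 1 and odd f (assumption)."""
    return (f - 1) // 2


n = 28           # input height/width
n_filters = 5    # channels after each conv layer
sizes = []
for _ in range(2):  # two conv + pool stages
    n = output_size(n, f=3, s=1, p=same_padding(3))  # conv with padding='same'
    sizes.append(n)
    n = output_size(n, f=2, s=2)                     # 2x2 max pooling, stride 2
    sizes.append(n)

flat = n * n * n_filters  # length of each flattened feature vector
print(sizes, flat)
```

Only the pooling layers shrink the feature map here (28 → 14 → 7); the flattened length is height × width × number of filters.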

Shapes in the Loss Functions

```python
import tensorflow as tf
from tensorflow.keras.losses import CategoricalCrossentropy

# Stand-in for the classifier output: softmax scores of shape (32, 10), i.e. 10 classes
x = tf.nn.softmax(tf.random.normal(shape=(32, 10)))

# integer class labels in [0, 10)
y = tf.random.uniform(minval=0, maxval=10, shape=(32,), dtype=tf.int32)
print(y)

# depth = number of classes
y = tf.one_hot(y, depth=10)
print(y.shape)

loss_object = CategoricalCrossentropy()
loss = loss_object(y, x)
print(loss.shape)
print(loss)
```

results

tf.Tensor([3 5 6 3 8 7 9 9 1 0 5 6 4 2 6 6 1 6 6 2 6 7 5 1 7 0 1 1 3 7 9 6], shape=(32,), dtype=int32)

(32, 10)

()

tf.Tensor(3.3937004, shape=(), dtype=float32)
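The loss has shape `()` because the per-sample cross-entropy values are averaged over the batch into a single scalar. A minimal pure-Python sketch of that computation follows; the function name and the `eps` clipping value are assumptions made for illustration, not the Keras implementation.

```python
import math


def categorical_crossentropy(y_true, y_pred, eps=1e-7):
    """Batch mean of -sum_c y_true[c] * log(y_pred[c])."""
    losses = []
    for t_row, p_row in zip(y_true, y_pred):
        # clip probabilities away from 0 so log() stays finite
        losses.append(-sum(t * math.log(max(p, eps)) for t, p in zip(t_row, p_row)))
    return sum(losses) / len(losses)


# two samples, three classes, one-hot labels
y_true = [[0, 1, 0], [1, 0, 0]]
y_pred = [[0.1, 0.8, 0.1], [0.5, 0.25, 0.25]]
loss = categorical_crossentropy(y_true, y_pred)
print(loss)

# a uniform prediction over 10 classes gives ln(10), whatever the label is
uniform_loss = categorical_crossentropy([[1] + [0] * 9], [[0.1] * 10])
print(uniform_loss)
```

With one-hot labels only the probability assigned to the true class contributes, so each sample's loss is simply -log of that probability.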

Convolutional Neural Networks

Basic structure of a CNN (with a loss function added)

Implementation with Sequential Method

```python
import tensorflow as tf
from tensorflow.keras.models import Sequential
from tensorflow.keras.layers import Conv2D
from tensorflow.keras.layers import MaxPooling2D
from tensorflow.keras.layers import Flatten
from tensorflow.keras.layers import Dense

N, n_H, n_W, n_C = 4, 28, 28, 3
n_conv_neurons = [10, 20, 30]
n_dense_neurons = [50, 30, 10]
k_size, padding = 3, 'same'
pool_size, pool_strides = 2, 2
activation = 'relu'

x = tf.random.normal(shape=(N, n_H, n_W, n_C))
print(x.shape)

# Advantage of Sequential: simple to build, you only keep adding layers
# Drawback: only a straight chain of layers is possible; other tensor
# operations cannot be inserted between the layers
model = Sequential()
model.add(Conv2D(filters=n_conv_neurons[0], kernel_size=k_size, padding=padding, activation=activation))
model.add(MaxPooling2D(pool_size=pool_size, strides=pool_strides))
model.add(Conv2D(filters=n_conv_neurons[1], kernel_size=k_size, padding=padding, activation=activation))
model.add(MaxPooling2D(pool_size=pool_size, strides=pool_strides))
model.add(Conv2D(filters=n_conv_neurons[2], kernel_size=k_size, padding=padding, activation=activation))
model.add(MaxPooling2D(pool_size=pool_size, strides=pool_strides))
model.add(Flatten())

model.add(Dense(units=n_dense_neurons[0], activation=activation))
model.add(Dense(units=n_dense_neurons[1], activation=activation))
model.add(Dense(units=n_dense_neurons[2], activation='softmax'))

predictions = model(x)
print(predictions.shape)
```

results

(4, 28, 28, 3)

(4, 10)
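To see what the model above contains, its trainable parameters can be counted by hand: a convolution layer has (k·k·c_in + 1)·filters parameters and a dense layer has (n_in + 1)·units. The sketch below is pure Python with hypothetical helper names; it assumes that each `'same'` convolution keeps the spatial size and that each 2x2 pooling with stride 2 halves it, rounding down.

```python
def conv_params(k, c_in, filters):
    """k*k*c_in weights plus one bias per filter."""
    return (k * k * c_in + 1) * filters


def dense_params(n_in, units):
    """n_in weights plus one bias per neuron."""
    return (n_in + 1) * units


n, c = 28, 3  # input height/width and channels
total = 0
for filters in [10, 20, 30]:
    total += conv_params(3, c, filters)
    c = filters  # 'same' conv keeps n and sets the channel count
    n = n // 2   # 2x2 pooling, stride 2: 28 -> 14 -> 7 -> 3

flat = n * n * c  # input size of the first dense layer
features = flat
for units in [50, 30, 10]:
    total += dense_params(features, units)
    features = units

print("flattened features:", flat)
print("trainable parameters:", total)
```

In this configuration the first dense layer holds more parameters than all three convolution layers together.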

↑ The Sequential part above, written more compactly with for loops

```python
# Reuses the imports and hyperparameters of the Sequential example above
model = Sequential()

for n_conv_neuron in n_conv_neurons:
    model.add(Conv2D(filters=n_conv_neuron, kernel_size=k_size, padding=padding, activation=activation))
    model.add(MaxPooling2D(pool_size=pool_size, strides=pool_strides))
model.add(Flatten())

# hidden dense layers only; the last entry is the softmax output layer
for n_dense_neuron in n_dense_neurons[:-1]:
    model.add(Dense(units=n_dense_neuron, activation=activation))
model.add(Dense(units=n_dense_neurons[-1], activation='softmax'))
```

Implementation with Model Sub-classing

```python
import tensorflow as tf
from tensorflow.keras.models import Model
from tensorflow.keras.layers import Conv2D
from tensorflow.keras.layers import MaxPooling2D
from tensorflow.keras.layers import Flatten
from tensorflow.keras.layers import Dense


class TestCNN(Model):
    def __init__(self):
        super(TestCNN, self).__init__()

        self.conv1 = Conv2D(filters=n_conv_neurons[0], kernel_size=k_size, padding=padding, activation=activation)
        self.conv1_pool = MaxPooling2D(pool_size=pool_size, strides=pool_strides)
        self.conv2 = Conv2D(filters=n_conv_neurons[1], kernel_size=k_size, padding=padding, activation=activation)
        self.conv2_pool = MaxPooling2D(pool_size=pool_size, strides=pool_strides)
        self.conv3 = Conv2D(filters=n_conv_neurons[2], kernel_size=k_size, padding=padding, activation=activation)
        self.conv3_pool = MaxPooling2D(pool_size=pool_size, strides=pool_strides)
        self.flatten = Flatten()

        self.dense1 = Dense(units=n_dense_neurons[0], activation=activation)
        self.dense2 = Dense(units=n_dense_neurons[1], activation=activation)
        self.dense3 = Dense(units=n_dense_neurons[2], activation='softmax')

    def call(self, x):
        print(x.shape)
        x = self.conv1(x)
        print(x.shape)
        x = self.conv1_pool(x)
        print(x.shape)
        x = self.conv2(x)
        print(x.shape)
        x = self.conv2_pool(x)
        print(x.shape)
        x = self.conv3(x)
        print(x.shape)
        x = self.conv3_pool(x)
        print(x.shape)
        x = self.flatten(x)
        print(x.shape)

        x = self.dense1(x)
        print(x.shape)
        x = self.dense2(x)
        print(x.shape)
        x = self.dense3(x)
        print(x.shape)
        return x


N, n_H, n_W, n_C = 4, 28, 28, 3
n_conv_neurons = [10, 20, 30]
n_dense_neurons = [50, 30, 10]
k_size, padding = 3, 'same'
pool_size, pool_strides = 2, 2
activation = 'relu'

x = tf.random.normal(shape=(N, n_H, n_W, n_C))

model = TestCNN()
y = model(x)
print(y.shape)
```

Implementation with Model and Layer Sub-classing

```python
import tensorflow as tf
from tensorflow.keras.models import Model
from tensorflow.keras.layers import Layer
from tensorflow.keras.layers import Conv2D
from tensorflow.keras.layers import MaxPooling2D
from tensorflow.keras.layers import Flatten
from tensorflow.keras.layers import Dense


# Layer sub-classing
# Without it, every conv and pooling layer must be written out one by one;
# the more layers there are, the more a reusable block simplifies the code
class MyConv(Layer):
    def __init__(self, n_neuron):
        super(MyConv, self).__init__()

        self.conv = Conv2D(filters=n_neuron, kernel_size=k_size, padding=padding, activation=activation)
        self.conv_pool = MaxPooling2D(pool_size=pool_size, strides=pool_strides)

    def call(self, x):
        x = self.conv(x)
        x = self.conv_pool(x)
        return x


class TestCNN(Model):
    def __init__(self):
        super(TestCNN, self).__init__()

        self.conv1 = MyConv(n_conv_neurons[0])
        self.conv2 = MyConv(n_conv_neurons[1])
        self.conv3 = MyConv(n_conv_neurons[2])
        self.flatten = Flatten()

        self.dense1 = Dense(units=n_dense_neurons[0], activation=activation)
        self.dense2 = Dense(units=n_dense_neurons[1], activation=activation)
        self.dense3 = Dense(units=n_dense_neurons[2], activation='softmax')

    def call(self, x):
        x = self.conv1(x)
        x = self.conv2(x)
        x = self.conv3(x)
        x = self.flatten(x)

        x = self.dense1(x)
        x = self.dense2(x)
        x = self.dense3(x)
        return x
```

Implementation with Sequential + Layer Sub-classing

```python
import tensorflow as tf
from tensorflow.keras.models import Sequential
from tensorflow.keras.layers import Layer
from tensorflow.keras.layers import Conv2D
from tensorflow.keras.layers import MaxPooling2D
from tensorflow.keras.layers import Flatten
from tensorflow.keras.layers import Dense


# Layer sub-classing
# Without it, every conv and pooling layer must be written out one by one;
# the more layers there are, the more a reusable block simplifies the code
class MyConv(Layer):
    def __init__(self, n_neuron):
        super(MyConv, self).__init__()

        self.conv = Conv2D(filters=n_neuron, kernel_size=k_size, padding=padding, activation=activation)
        self.conv_pool = MaxPooling2D(pool_size=pool_size, strides=pool_strides)

    def call(self, x):
        x = self.conv(x)
        x = self.conv_pool(x)
        return x


model = Sequential()
# each n_conv_neurons[i] is passed to MyConv as n_neuron
model.add(MyConv(n_conv_neurons[0]))
model.add(MyConv(n_conv_neurons[1]))
model.add(MyConv(n_conv_neurons[2]))
model.add(Flatten())

model.add(Dense(units=n_dense_neurons[0], activation=activation))
model.add(Dense(units=n_dense_neurons[1], activation=activation))
model.add(Dense(units=n_dense_neurons[2], activation='softmax'))
```

The call function, simplified by grouping the layers into Sequential blocks

```python
import tensorflow as tf
from tensorflow.keras.models import Sequential
from tensorflow.keras.models import Model
from tensorflow.keras.layers import Layer
from tensorflow.keras.layers import Conv2D
from tensorflow.keras.layers import MaxPooling2D
from tensorflow.keras.layers import Flatten
from tensorflow.keras.layers import Dense


class MyConv(Layer):
    def __init__(self, n_neuron):
        super(MyConv, self).__init__()

        self.conv = Conv2D(filters=n_neuron, kernel_size=k_size, padding=padding, activation=activation)
        self.conv_pool = MaxPooling2D(pool_size=pool_size, strides=pool_strides)

    def call(self, x):
        x = self.conv(x)
        x = self.conv_pool(x)
        return x


class TestCNN(Model):
    def __init__(self):
        super(TestCNN, self).__init__()

        # feature extractor
        self.fe = Sequential()
        self.fe.add(MyConv(n_conv_neurons[0]))
        self.fe.add(MyConv(n_conv_neurons[1]))
        self.fe.add(MyConv(n_conv_neurons[2]))
        self.fe.add(Flatten())

        # classifier
        self.classifier = Sequential()
        self.classifier.add(Dense(units=n_dense_neurons[0], activation=activation))
        self.classifier.add(Dense(units=n_dense_neurons[1], activation=activation))
        self.classifier.add(Dense(units=n_dense_neurons[2], activation='softmax'))

    def call(self, x):
        # replaces the chain conv1 -> conv2 -> conv3 -> flatten
        x = self.fe(x)
        # replaces the chain dense1 -> dense2 -> dense3
        x = self.classifier(x)
        return x
```

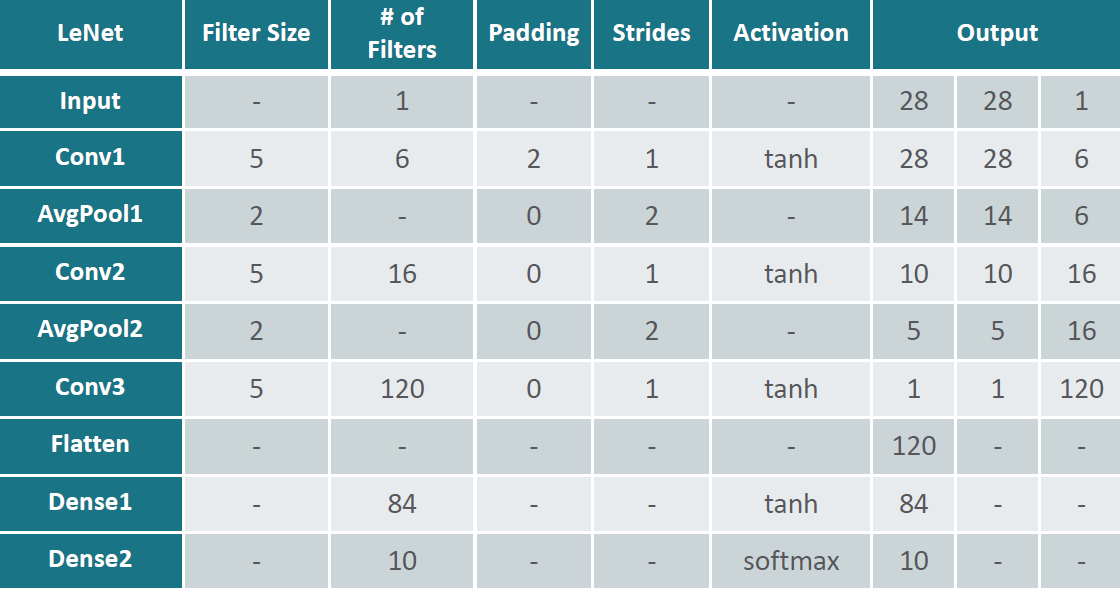

LeNet

LeNet with Model Sub-classing

```python
import tensorflow as tf
from tensorflow.keras.models import Model
from tensorflow.keras.layers import Conv2D
from tensorflow.keras.layers import AveragePooling2D
from tensorflow.keras.layers import Flatten
from tensorflow.keras.layers import Dense


class LeNet(Model):
    def __init__(self):
        super(LeNet, self).__init__()

        # feature extractor
        self.conv1 = Conv2D(filters=6, kernel_size=5, padding='same', activation='tanh')
        self.conv1_pool = AveragePooling2D(pool_size=2, strides=2)
        self.conv2 = Conv2D(filters=16, kernel_size=5, padding='valid', activation='tanh')
        self.conv2_pool = AveragePooling2D(pool_size=2, strides=2)
        self.conv3 = Conv2D(filters=120, kernel_size=5, padding='valid', activation='tanh')
        self.flatten = Flatten()

        # classifier
        self.dense1 = Dense(units=84, activation='tanh')
        self.dense2 = Dense(units=10, activation='softmax')

    def call(self, x):
        print("x: {}".format(x.shape))
        x = self.conv1(x)
        print("x: {}".format(x.shape))
        x = self.conv1_pool(x)
        print("x: {}".format(x.shape))
        x = self.conv2(x)
        print("x: {}".format(x.shape))
        x = self.conv2_pool(x)
        print("x: {}".format(x.shape))
        x = self.conv3(x)
        print("x: {}".format(x.shape))
        x = self.flatten(x)
        print("x: {}".format(x.shape))

        x = self.dense1(x)
        print("x: {}".format(x.shape))
        x = self.dense2(x)
        print("x: {}".format(x.shape))
        return x


model = LeNet()

x = tf.random.normal(shape=(32, 28, 28, 1))
predictions = model(x)
```

results

x: (32, 28, 28, 1)

x: (32, 28, 28, 6)

x: (32, 14, 14, 6)

x: (32, 10, 10, 16)

x: (32, 5, 5, 16)

x: (32, 1, 1, 120)

x: (32, 120)

x: (32, 84)

x: (32, 10)
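The trace above mixes both padding modes: with stride 1, a `'same'` convolution keeps the spatial size n, while a `'valid'` convolution with kernel size k yields n - k + 1. The following pure-Python sketch reproduces the LeNet shapes and counts the trainable parameters under those rules; the helper `conv_out` and the layer table are illustrative assumptions, not a Keras API.

```python
def conv_out(n, k, padding):
    """Stride-1 convolution: 'same' keeps n, 'valid' gives n - k + 1."""
    return n if padding == "same" else n - k + 1


# (filters, padding, followed by 2x2 average pooling?)
conv_layers = [(6, "same", True), (16, "valid", True), (120, "valid", False)]

n, c = 28, 1  # 28x28 grayscale input
params = 0
trace = []
for filters, padding, pool in conv_layers:
    params += (5 * 5 * c + 1) * filters  # 5x5 kernels plus one bias per filter
    n, c = conv_out(n, 5, padding), filters
    trace.append((n, n, c))
    if pool:
        n //= 2  # pool_size=2, strides=2 halves the size
        trace.append((n, n, c))

features = n * n * c  # flattened size fed to the classifier
for units in (84, 10):
    params += (features + 1) * units
    features = units

print(trace)
print("trainable parameters:", params)
```

Because the last convolution reduces the feature map to 1x1, flattening simply turns the 120 channels into a 120-dimensional feature vector.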

LeNet with Hybrid Method

```python
import tensorflow as tf
from tensorflow.keras.models import Model
from tensorflow.keras.layers import Layer
from tensorflow.keras.layers import Conv2D
from tensorflow.keras.layers import AveragePooling2D
from tensorflow.keras.layers import Flatten
from tensorflow.keras.layers import Dense


class ConvLayer(Layer):
    # filters and padding differ from layer to layer, so they are arguments
    # conv3 has no pooling layer, so pooling is switched by the pool flag
    def __init__(self, filters, padding, pool=True):
        super(ConvLayer, self).__init__()
        self.pool = pool

        self.conv = Conv2D(filters=filters, kernel_size=5, padding=padding, activation='tanh')
        if pool:
            self.conv_pool = AveragePooling2D(pool_size=2, strides=2)

    def call(self, x):
        x = self.conv(x)
        if self.pool:
            x = self.conv_pool(x)
        return x


class LeNet(Model):
    def __init__(self):
        super(LeNet, self).__init__()

        # feature extractor
        self.conv1 = ConvLayer(filters=6, padding='same')
        self.conv2 = ConvLayer(filters=16, padding='valid')
        self.conv3 = ConvLayer(filters=120, padding='valid', pool=False)
        self.flatten = Flatten()

        # classifier
        self.dense1 = Dense(units=84, activation='tanh')
        self.dense2 = Dense(units=10, activation='softmax')

    def call(self, x):
        x = self.conv1(x)
        x = self.conv2(x)
        x = self.conv3(x)
        x = self.flatten(x)

        x = self.dense1(x)
        x = self.dense2(x)
        return x


model = LeNet()

x = tf.random.normal(shape=(32, 28, 28, 1))
predictions = model(x)
```

Hybrid Method → Model + Layer Sub-classing

Forward Propagation of LeNet

```python
import tensorflow as tf
import numpy as np
from tensorflow.keras.models import Model
from tensorflow.keras.layers import Layer
from tensorflow.keras.layers import Conv2D
from tensorflow.keras.layers import AveragePooling2D
from tensorflow.keras.layers import Flatten
from tensorflow.keras.layers import Dense
from tensorflow.keras.datasets import mnist
from tensorflow.keras.losses import SparseCategoricalCrossentropy


#### LeNet Implementation ####
class ConvLayer(Layer):
    def __init__(self, filters, padding, pool=True):
        super(ConvLayer, self).__init__()
        self.pool = pool

        self.conv = Conv2D(filters=filters, kernel_size=5, padding=padding, activation='tanh')
        if pool:
            self.conv_pool = AveragePooling2D(pool_size=2, strides=2)

    def call(self, x):
        x = self.conv(x)
        if self.pool:
            x = self.conv_pool(x)
        return x


class LeNet(Model):
    def __init__(self):
        super(LeNet, self).__init__()

        # feature extractor
        self.conv1 = ConvLayer(filters=6, padding='same')
        self.conv2 = ConvLayer(filters=16, padding='valid')
        self.conv3 = ConvLayer(filters=120, padding='valid', pool=False)
        self.flatten = Flatten()

        # classifier
        self.dense1 = Dense(units=84, activation='tanh')
        self.dense2 = Dense(units=10, activation='softmax')

    def call(self, x):
        x = self.conv1(x)
        x = self.conv2(x)
        x = self.conv3(x)
        x = self.flatten(x)

        x = self.dense1(x)
        x = self.dense2(x)
        return x


#### Dataset Preparation ####
(train_images, train_labels), _ = mnist.load_data()

# add a trailing channel dimension: (60000, 28, 28) -> (60000, 28, 28, 1)
train_images = np.expand_dims(train_images, axis=3).astype(np.float32)
train_labels = train_labels.astype(np.int32)

# build the dataset
train_ds = tf.data.Dataset.from_tensor_slices((train_images, train_labels))
train_ds = train_ds.batch(32)

#### Forward Propagation ####
model = LeNet()
loss_object = SparseCategoricalCrossentropy()

for images, labels in train_ds:
    predictions = model(images)
    loss = loss_object(labels, predictions)
    print(loss)
    break
```
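The MNIST labels are plain integers, which is why this example uses `SparseCategoricalCrossentropy` and needs no `tf.one_hot` step. The pure-Python sketch below illustrates that the sparse form (indexing the true class) and the one-hot form (summing over all classes) give the same value; the function names are hypothetical and the probabilities are made-up illustration values.

```python
import math


def sparse_categorical_crossentropy(labels, probs):
    """Batch mean of -log(probability assigned to the true class index)."""
    return sum(-math.log(p[y]) for y, p in zip(labels, probs)) / len(labels)


def one_hot(label, depth):
    """Vector of length depth with a 1.0 at position label."""
    return [1.0 if i == label else 0.0 for i in range(depth)]


def categorical_crossentropy(y_true, probs):
    """Batch mean of -sum_c y_true[c] * log(probs[c])."""
    per_sample = [
        -sum(t * math.log(p) for t, p in zip(t_row, p_row))
        for t_row, p_row in zip(y_true, probs)
    ]
    return sum(per_sample) / len(per_sample)


labels = [1, 0]                                   # integer class labels
probs = [[0.1, 0.8, 0.1], [0.5, 0.25, 0.25]]      # softmax-like outputs

sparse_loss = sparse_categorical_crossentropy(labels, probs)
dense_loss = categorical_crossentropy([one_hot(y, 3) for y in labels], probs)
print(sparse_loss, dense_loss)
```

The sparse form avoids materializing a `(batch, classes)` label tensor, which matters more as the number of classes grows.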

References and sources: https://towardsdatascience.com/convolutional-autoencoders-for-image-noise-reduction-32fce9fc1763
